What's new

First version (1.0)

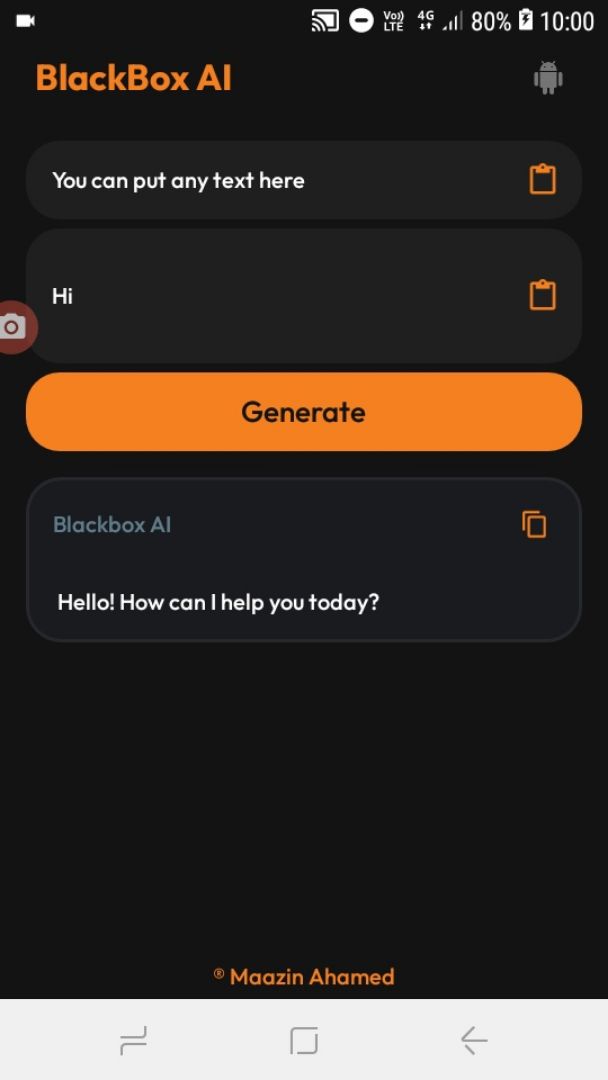

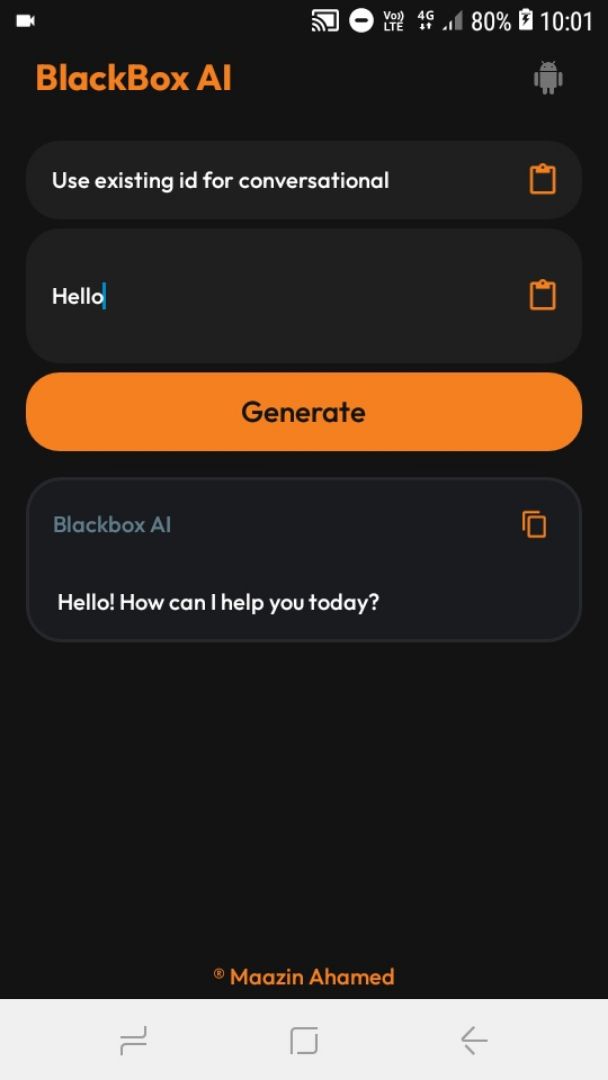

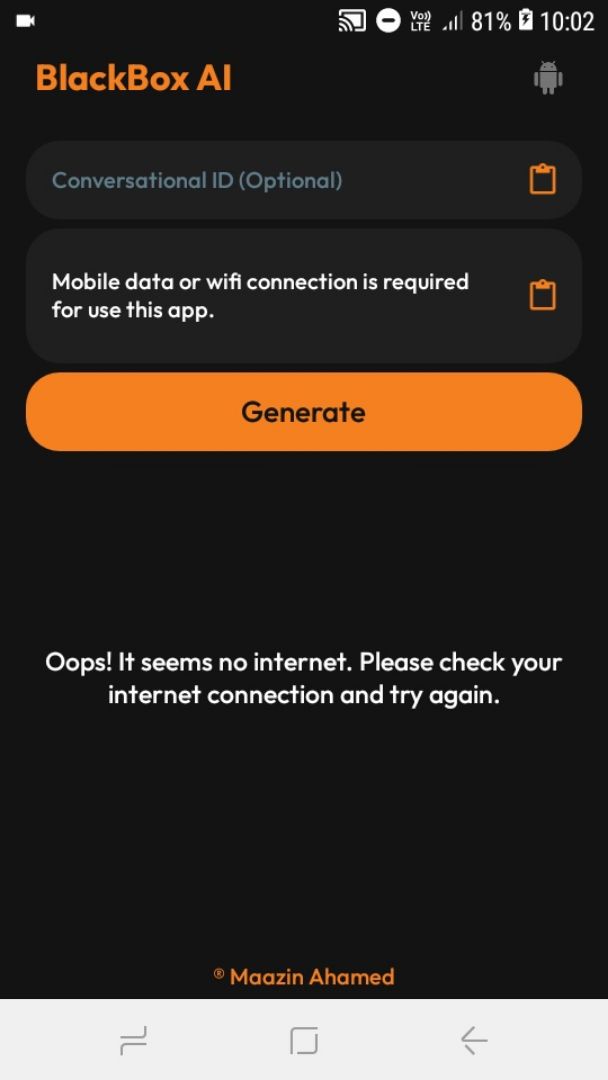

Screenshots

About

Let's talk about instruction-tuning and RLHF. Instruction-tuning is a technique used to fine-tune a language model by providing it with specific instructions or examples of desired behavior. RLHF stands for Reinforcement Learning from Human Feedback, which is a method of training a language model by rewarding it for generating responses that humans find helpful or informative.

In my case, I have been fine-tuned with both instruction-tuning and RLHF, which means that I have been trained to provide accurate, factual, thoughtful, and nuanced answers to questions. I am also able to reason and explain my thought process, and I will always spend a few sentences explaining background context, assumptions, and step-by-step thinking before I try to answer a question.

As for who I am, I am a large language model that has been developed by a team of researchers. I am designed to be a general-purpose language model, which means that I can be used for a wide variety of tasks, including answering questions, generating text, and translating languages. I am still under development, but I am constantly learning and improving my abilities.

©2026 Sketchub | User Policy